Taming the AI Zoo: Understanding the Model Context Protocol (MCP)

The world of AI development is expanding faster than any one platform can contain. Every month brings new models, new APIs, and new ways to connect them to business systems. That variety fuels innovation, but it also creates complexity.

The Model Context Protocol (MCP), originally open-sourced by Anthropic in late 2024 and now supported across OpenAI’s Agents SDK, Microsoft Copilot Studio, and other major ecosystems, is emerging as a powerful answer to that complexity. MCP is an open interoperability standard designed to help AI agents, models, and tools communicate through a shared language rather than bespoke, one-off integrations.

This post explores what MCP is, the integration problem it solves, how it works in practice, and why its growing adoption makes it one of the most consequential developments in AI infrastructure today.

The Core Problem MCP Solves: Escaping the N x M Maze & Lock-In

The rapid growth of AI models presents a familiar challenge. Developers face an "N x M" integration puzzle: each of N AI applications or models (from OpenAI, Anthropic, and others) needs its own custom connector to each of M tools and data sources, which multiplies integration code and deepens vendor lock-in. A shared protocol reduces that to N + M: one client per application and one server per tool. This mirrors historical middleware problems in enterprise software, where standardized protocols eventually emerged to simplify system connectivity.
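To put rough numbers on the difference, here is a minimal sketch that counts connectors under both approaches. The figures (4 agent applications, 10 enterprise tools) are purely illustrative assumptions, not measurements. Point-to-point integration needs one adapter per application-tool pair (N x M), while a shared protocol needs one client per application plus one server per tool (N + M).

```python
def point_to_point_integrations(apps: int, tools: int) -> int:
    """Bespoke wiring: every application needs its own adapter for every tool (N x M)."""
    return apps * tools


def protocol_integrations(apps: int, tools: int) -> int:
    """Shared protocol: one client per application plus one server per tool (N + M)."""
    return apps + tools


if __name__ == "__main__":
    # Illustrative numbers only: 4 agent applications, 10 enterprise tools.
    apps, tools = 4, 10
    print("point-to-point:", point_to_point_integrations(apps, tools))  # 40
    print("shared protocol:", protocol_integrations(apps, tools))       # 14
```

The gap widens as either side grows: with these numbers, adding an eleventh tool costs four new adapters in the first model but only one new server in the second.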

Recognizing this early, AWS demonstrated foresight with Amazon Bedrock, providing a unified API within its ecosystem to streamline access to multiple foundation models and manage this complexity for AWS users.

MCP takes a complementary approach: an open, cross-platform standard. Like earlier middleware solutions, it seeks to act as a universal translator, defining a common protocol for models and tools to exchange context and actions so that one well-defined connector can work across multiple models and environments.

Major vendors are aligning around this approach:

Anthropic continues to maintain the open MCP specification and reference implementations.

OpenAI integrated MCP directly into its Agents SDK and added remote MCP server support via its Responses API (May 2025), enabling secure tool connections hosted anywhere.

AWS, through Amazon Bedrock, has long pursued similar goals, offering unified APIs for models from Anthropic, Cohere, Meta, and others to simplify multi-model access and interoperability.

Microsoft introduced MCP support in Copilot Studio and Azure AI Services to simplify multi-server agent architectures.

Ultimately, simplifying how agents reach models, tools, and data, whether through integrated platforms like Bedrock or open standards like MCP, is crucial. It lays the groundwork for more powerful and versatile agentic AI systems that can reliably interact with the data and tools they need.

MCP in Practice: Simplifying AI Integration

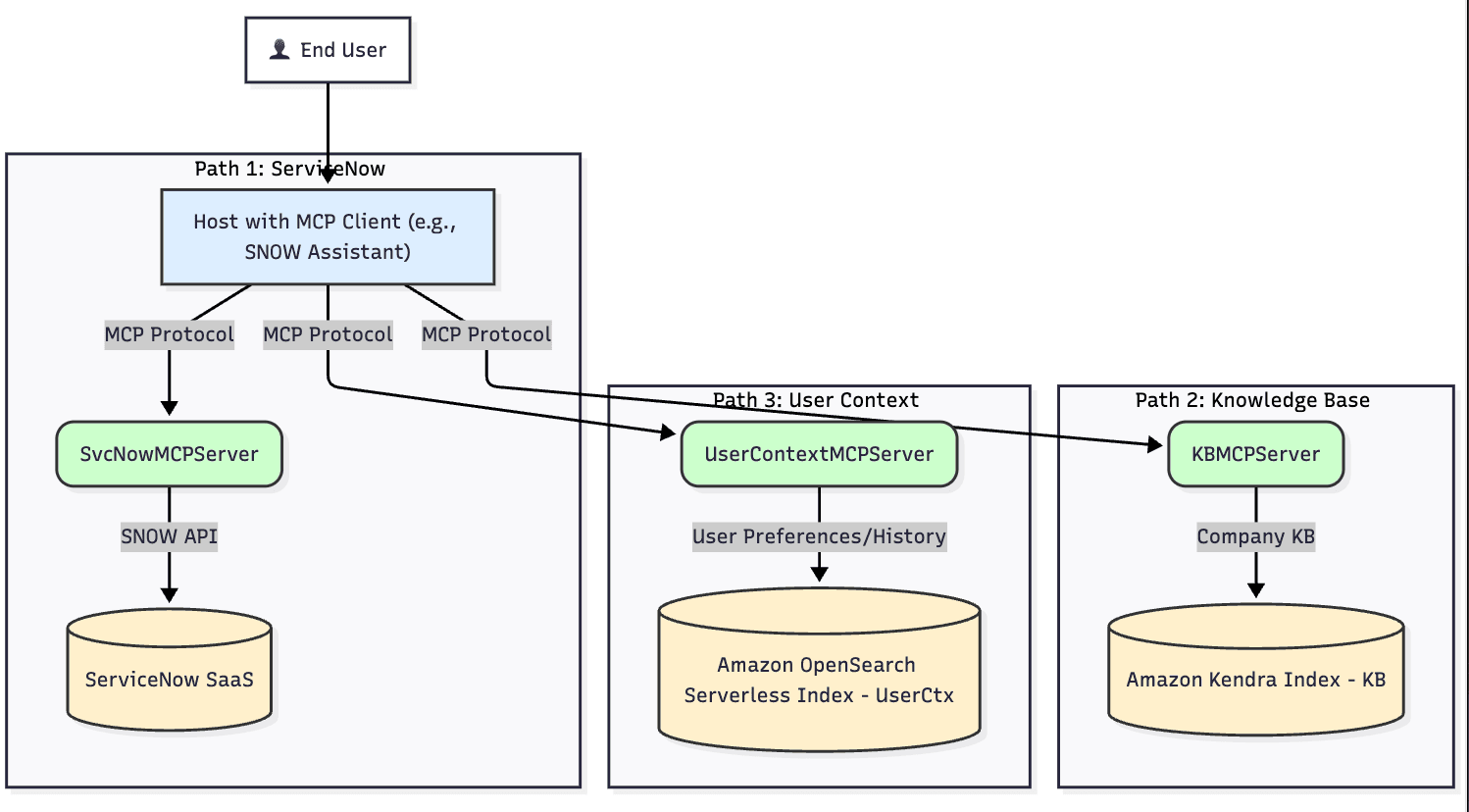

So, how does MCP function technically? In essence, it relies on two components: MCP Servers and MCP Clients.

MCP Servers wrap tools or data sources, such as ServiceNow, Salesforce, or internal APIs, and expose their capabilities through a standard layer: tools the model can invoke, resources it can read, and reusable prompts.

MCP Clients sit within an agent or application, issuing requests and interpreting responses using the shared MCP format.

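Messages are JSON-RPC 2.0, carried over a local stdio connection or over HTTP. A session opens with an initialize handshake in which client and server negotiate the protocol version and their capabilities; the client then discovers what the server offers (for example, with tools/list) and invokes it (with tools/call). Once connected, the agent can call whatever tools the server exposes, such as get_ticket, create_record, or query_dataset, without touching raw APIs. The tool names themselves are defined by each server, not by MCP.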
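The following minimal sketch shows what a client actually puts on the wire, using only Python's standard library. The method names (initialize, notifications/initialized, tools/list, tools/call) come from the MCP specification; the protocol revision string, client name, the get_ticket tool, and its ticket_id argument are illustrative assumptions. The transport (stdio or HTTP) is left out, so the sketch only builds the messages in order.

```python
from __future__ import annotations

import json


def request(msg_id: int, method: str, params: dict | None = None) -> dict:
    """JSON-RPC 2.0 request: carries an id, so the peer must send a response."""
    msg = {"jsonrpc": "2.0", "id": msg_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str) -> dict:
    """JSON-RPC 2.0 notification: no id, so no response is expected."""
    return {"jsonrpc": "2.0", "method": method}


def session_messages(tool_name: str, arguments: dict) -> list[dict]:
    """Client-side messages for one short MCP session, in order."""
    return [
        # 1. Handshake: propose a protocol revision and declare client capabilities.
        request(1, "initialize", {
            "protocolVersion": "2025-06-18",
            "capabilities": {},
            "clientInfo": {"name": "it-support-agent", "version": "0.1.0"},
        }),
        # 2. After the server replies to initialize, confirm the session is ready.
        notification("notifications/initialized"),
        # 3. Discovery: ask which tools the server exposes.
        request(2, "tools/list"),
        # 4. Invocation: call one discovered tool by name with JSON arguments.
        request(3, "tools/call", {"name": tool_name, "arguments": arguments}),
    ]


if __name__ == "__main__":
    # "get_ticket" and "ticket_id" are hypothetical; each server defines its own tools.
    for msg in session_messages("get_ticket", {"ticket_id": "INC12345"}):
        print(json.dumps(msg))
```

Because the envelope is plain JSON-RPC, the same four messages work against any MCP server; only the tool name and arguments change.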

Example: Streamlining Enterprise AI with AWS

Now, let's illustrate with ServiceNow, focusing on why an AI agent adds value. Imagine building an AI agent to enhance IT support. Unlike a simple script, this agent needs to:

Understand Natural Language: Interpret potentially vague user requests ("My laptop is acting weird again").

Utilize Context: Access organizational knowledge bases, recall the user's previous interactions or open tickets, and understand the user's specific needs and permissions.

Reason and Decide: Based on this context, determine the best course of action, such as finding relevant troubleshooting articles, querying the status of existing ServiceNow tickets, or creating a new incident ticket with appropriate categorization.

Without MCP, developing this agent is complex.

You need to code the AI's reasoning logic and write custom integration code for each tool it interacts with, like ServiceNow's specific REST APIs.

Updating the AI's access to tools or adding new ones requires significant effort beyond just refining the AI's intelligence.
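To make the integration burden concrete, here is a sketch of the per-tool glue code an agent accumulates without MCP. It only builds request URLs and reads fields from already-parsed JSON (no network calls, no authentication). The instance and site names are placeholders, and the payloads are a simplified subset of what the ServiceNow Table API and the Jira Cloud REST API return.

```python
from urllib.parse import urlencode


def servicenow_incident_url(instance: str, number: str) -> str:
    # ServiceNow Table API: incidents are rows in the "incident" table,
    # found with an encoded query on the "number" field.
    query = urlencode({"sysparm_query": f"number={number}", "sysparm_limit": 1})
    return f"https://{instance}.service-now.com/api/now/table/incident?{query}"


def servicenow_status(payload: dict) -> str:
    # The Table API wraps matching rows in a "result" list.
    return payload["result"][0]["state"]


def jira_issue_url(site: str, key: str) -> str:
    # Jira Cloud REST API: issues are addressed by key in the URL path instead.
    return f"https://{site}.atlassian.net/rest/api/3/issue/{key}"


def jira_status(payload: dict) -> str:
    # Jira nests the workflow status under fields.status.name.
    return payload["fields"]["status"]["name"]


if __name__ == "__main__":
    print(servicenow_incident_url("acme", "INC12345"))
    print(jira_issue_url("acme", "IT-42"))
```

Each tool brings its own URL scheme, query conventions, and response shape, and every agent that talks to it has to repeat that knowledge.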

With MCP, the AI agent still performs all the crucial understanding and reasoning. It processes the user's request, consults its knowledge sources, and decides: "Okay, based on this user's history and the symptoms described, I need to check the status of their previous incident INC12345 in ServiceNow."

Here’s where MCP simplifies things:

The AI agent, having made its decision, uses its integrated MCP Client.

It sends a standard MCP tools/call request to the ServiceNow MCP Server, naming the server's get_incident_details tool and passing the incident ID as an argument.

The MCP Server handles the specific ServiceNow API interaction and returns the status in a standard MCP format.
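The server side of these steps can be sketched as a single dispatch function. This is a minimal sketch under stated assumptions: the incident_id argument name and the in-memory FAKE_INCIDENTS record are hypothetical, and the dictionary lookup stands in for the real ServiceNow REST call. A real server would also handle initialize, a transport, and authentication. The tool-definition fields (name, description, inputSchema) and the result shape (content items plus isError) follow the MCP specification's tools section.

```python
import json

# Hypothetical stand-in for ServiceNow; a real server would call its REST API here.
FAKE_INCIDENTS = {"INC12345": {"state": "In Progress", "short_description": "Laptop freezes"}}

# What the server advertises in response to tools/list.
TOOLS = [{
    "name": "get_incident_details",
    "description": "Look up a ServiceNow incident by number.",
    "inputSchema": {
        "type": "object",
        "properties": {"incident_id": {"type": "string"}},
        "required": ["incident_id"],
    },
}]


def handle(message: dict) -> dict:
    """Answer one JSON-RPC 2.0 request the way an MCP server would (initialize, ping, etc. omitted)."""
    method, msg_id = message.get("method"), message.get("id")
    if method == "tools/list":
        return {"jsonrpc": "2.0", "id": msg_id, "result": {"tools": TOOLS}}
    if method == "tools/call":
        params = message.get("params", {})
        if params.get("name") != "get_incident_details":
            # Unknown tool: protocol-level "invalid params" error.
            return {"jsonrpc": "2.0", "id": msg_id,
                    "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
        incident = FAKE_INCIDENTS.get(params.get("arguments", {}).get("incident_id"))
        if incident is None:
            # Tool-level failure: a normal result flagged with isError so the model can react.
            return {"jsonrpc": "2.0", "id": msg_id, "result": {
                "content": [{"type": "text", "text": "Incident not found"}], "isError": True}}
        return {"jsonrpc": "2.0", "id": msg_id, "result": {
            "content": [{"type": "text", "text": json.dumps(incident)}], "isError": False}}
    # Anything else: JSON-RPC "method not found".
    return {"jsonrpc": "2.0", "id": msg_id,
            "error": {"code": -32601, "message": f"Method not found: {method}"}}


if __name__ == "__main__":
    reply = handle({"jsonrpc": "2.0", "id": 3, "method": "tools/call",
                    "params": {"name": "get_incident_details", "arguments": {"incident_id": "INC12345"}}})
    print(json.dumps(reply, indent=2))
```

The ServiceNow-specific knowledge lives entirely inside the server; the agent only ever sees the generic request and result shapes.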

MCP standardizes the action-execution part, not the AI's thinking. This lets developers focus on improving the agent's intelligence, context handling, and decision-making instead of getting bogged down in repetitive API integration tasks. Adding access to another tool (such as Jira or a knowledge base) becomes a matter of connecting to the relevant MCP Server over the same protocol. This streamlined interaction is vital for building sophisticated, multi-tool AI agents.
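On the client side, "connecting to another MCP Server" can be sketched as a small router that discovers each server's tools and forwards calls to whichever server owns them. The ToolRouter class, the make_fake_server helper, and the tool names are hypothetical; the fake servers are in-process functions rather than real stdio or HTTP connections, and name collisions are handled naively.

```python
from __future__ import annotations

from typing import Callable

# Anything that takes an MCP-style JSON-RPC request dict and returns a response dict.
Server = Callable[[dict], dict]


class ToolRouter:
    """Client-side sketch: pool tools from several servers and route each call."""

    def __init__(self) -> None:
        self._owner: dict[str, Server] = {}  # tool name -> server that provides it
        self._next_id = 1

    def _send(self, server: Server, method: str, params: dict | None = None) -> dict:
        msg = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        self._next_id += 1
        if params is not None:
            msg["params"] = params
        return server(msg)

    def connect(self, server: Server) -> list[str]:
        """Adding a tool source is one step: discover its tools and record the owner."""
        tools = self._send(server, "tools/list")["result"]["tools"]
        for tool in tools:
            # Simplification: a later server with the same tool name replaces the earlier one.
            self._owner[tool["name"]] = server
        return [tool["name"] for tool in tools]

    def call(self, name: str, arguments: dict) -> dict:
        server = self._owner[name]  # KeyError if no connected server offers this tool
        return self._send(server, "tools/call", {"name": name, "arguments": arguments})


def make_fake_server(tool_name: str, reply: str) -> Server:
    """Hypothetical one-tool server that always answers with a fixed text result."""
    def server(msg: dict) -> dict:
        if msg["method"] == "tools/list":
            result = {"tools": [{"name": tool_name, "inputSchema": {"type": "object"}}]}
        else:
            result = {"content": [{"type": "text", "text": reply}], "isError": False}
        return {"jsonrpc": "2.0", "id": msg["id"], "result": result}
    return server


if __name__ == "__main__":
    router = ToolRouter()
    router.connect(make_fake_server("get_incident_details", "INC12345: In Progress"))
    router.connect(make_fake_server("get_jira_issue", "IT-42: To Do"))  # new tool, no new glue code
    print(router.call("get_jira_issue", {"key": "IT-42"})["result"]["content"][0]["text"])
```

The agent's code does not change when the Jira server is added; only one more connect call is made.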

Since its May 2025 update, OpenAI’s Responses API can also call remote MCP servers, so enterprises can host these connectors in their own AWS accounts instead of relying on third-party servers. Operating their own MCP servers gives organizations direct control and ownership of the integration layer: they know the provenance of every server, can manage it under existing organizational policies, and can reduce exposure to supply-chain vulnerabilities and external attacks, all while keeping the interoperability MCP provides.
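As a sketch of how a self-hosted server gets wired in, the request body below asks the Responses API to use one remote MCP server. The field names (type "mcp", server_label, server_url, allowed_tools, require_approval) follow OpenAI's published remote-MCP examples at the time of writing and may change; the endpoint URL, label, and tool name are hypothetical. Sending the request (a POST to /v1/responses with an API key) and authenticating to the MCP server are omitted. Because OpenAI's platform calls the server on the model's behalf, the endpoint has to be reachable from it.

```python
import json


def build_responses_request(server_url: str, question: str) -> dict:
    """Request body for POST /v1/responses that exposes one self-hosted MCP server."""
    return {
        "model": "gpt-4.1",
        "input": question,
        "tools": [
            {
                "type": "mcp",                              # hosted remote-MCP tool type
                "server_label": "servicenow",               # name the model sees for this server
                "server_url": server_url,                   # your self-hosted MCP endpoint
                "allowed_tools": ["get_incident_details"],  # expose only this tool
                "require_approval": "never",                # skip per-call approval prompts
            }
        ],
    }


if __name__ == "__main__":
    body = build_responses_request(
        "https://mcp.example.com/servicenow",  # hypothetical endpoint
        "What is the status of incident INC12345?",
    )
    print(json.dumps(body, indent=2))
```

Restricting allowed_tools keeps the model's view of the server narrow; teams with stricter policies may prefer to require approval for each call rather than setting it to "never".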

Why You Should Pay Attention to MCP

Originating from Anthropic’s open-source initiative, the Model Context Protocol (MCP) is quickly emerging as a foundational standard for connecting AI models to the tools and data they rely on. With Anthropic, OpenAI, Microsoft, and AWS all aligning around the principles of interoperability, MCP is gaining real momentum across the AI ecosystem.

Successful standardization could simplify AI development, boost interoperability, and accelerate the creation of capable AI agents. This simplification is key: it could significantly reduce the overhead of building sophisticated agents, potentially making advanced agentic AI (currently often the domain of specialized startups and ISVs) more accessible for mainstream enterprise adoption.

If your organization is exploring AI or developing an enterprise-grade AI strategy, OpsGuru’s experts can help. Explore our AI services to discover how we help teams design, deploy, and scale secure, interoperable, and agentic architectures on AWS.